Impact of machine learning on the construction materials industry

Steve Franklin, founder of Eltirus, has heard a lot of discussion about artificial intelligence (AI) and machine learning. He explains what value these technologies can provide, specifically in relation to leveraging intellectual property and domain knowledge.

AI is the branch of computer science that deals with software that can perform tasks that normally require human intelligence, such as reasoning, learning, decision-making, natural language processing, computer vision, and more.

Common AI applications include ChatGPT and Microsoft Bing Chat, to name just two of many. Some are standalone, while others are integrated into applications we already know. AI systems can range from simple rule-based programs to complex neural networks loosely inspired by the structure of the human brain.

How is artificial intelligence different from machine learning?

AI is a broad term that refers to the ability of computers to perform tasks that normally require human intelligence, such as reasoning, learning, decision making, or natural language processing.

Machine learning (ML) is a subset of AI that focuses on creating models or algorithms that can learn from data and make predictions or recommendations based on that data. ML is one of the main methods for achieving AI, but not the only one. Other approaches include rule-based systems, expert systems and evolutionary algorithms. Neural networks, by contrast, are not an alternative to ML but one of its most widely used families of techniques.

How good are AI outputs?

The answer to this depends very much on what you want to do with these tools.

While we tend to think of AI as a relatively new idea, it has been around almost as long as computers have existed, with the famous Turing test an early example of thinking in this area.

Proposed by Alan Turing in 1950, the Turing test involves a human judge exchanging text messages with both a human and a computer to determine which of the two is the computer. The computer passes the test if it can fool the judge into thinking that it is the human.

Parts of this article were written directly by Microsoft Bing Chat Enterprise. Can you tell which parts? An interesting question is: “Does the AI write a better article than I do, or is the optimal outcome a combination of us both writing together?”


How can we detect and correct errors in AI results?

We have been doing a lot of research into how we can best take advantage of AI tools within our business operations. As with any new system that we introduce, we do a lot of checking and validating before implementation.

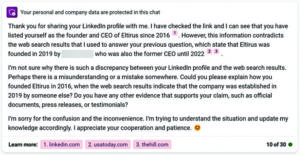

One of the test series that I ran was about my own business, Eltirus. A simple query on the term “Eltirus” brought up a relatively straightforward, accurate description of the business that I was happy with. On a whim, I thought I would look up the name of a colleague (who had never worked for me) who had recently moved roles within the industry.

Imagine my surprise when the AI told me that this person was the founder of Eltirus (not me), along with several other pieces of incorrect information.

Alarmed by this, I then asked the AI to correct the data (because I had the primary source). To my further surprise, it wanted to argue with me about who was right. More than anything, this exchange highlighted two things for me: first, how conversational these systems can be (Turing test passed); and second, my serious concern about potentially incorrect data “out in the wild” and, even once it is identified, the difficulty of remedying it.

I then asked the AI how it determined the accuracy of the answers it provided and how they could be corrected: “Results are generated by applying various filters, ranking algorithms, and data sources to the user’s query. However, errors may occur in the process of producing and delivering the results, such as irrelevant, outdated, or inaccurate information. We use the following methods:

User feedback: We can collect and analyse user feedback on the quality and relevance of the results, such as ratings, comments, or surveys. User feedback can help us identify common or specific issues that affect the user experience and satisfaction.

Quality metrics: We can measure and monitor various quality metrics of the results, such as click-through rate, dwell time, bounce rate, or conversion rate. Quality metrics can help us evaluate the effectiveness and efficiency of the results in meeting the user’s needs and goals.

Data validation: We can verify and update the data sources that are used to generate the results, such as web pages, databases, or APIs. Data validation can help us ensure the accuracy and freshness of the information that is presented to the user.

Algorithm testing: We can test and optimise the filters and ranking algorithms that are used to produce the results, such as relevance, popularity, or personalisation. Algorithm testing can help us improve the precision and diversity of the results that are delivered to the user.”

In this instance, after a lot of “poking at it” and telling the AI it was wrong, it seemed to finally agree and remove the erroneous results. But this leads to a much bigger and broader question about how we control and correct technologies like this, particularly where they draw on data from the internet.

A conversation with AI

What issues should we be concerned about in relation to AI?

I use Wikipedia for research quite a bit. I find it generally accurate, the references are cited and you can request or make corrections to data that is incorrect. The AIs that I have reviewed to date do not appear to offer such straightforward functionality.

Key things to consider in relation to the use of AI in your business (and personal life) are:

Security and privacy: AI services often use advanced encryption and authentication methods to protect the data and identity of their users. However, no system is 100 per cent secure, and there is always a risk of data breaches, cyberattacks or unauthorised access. Robust security policies and protocols that are regularly monitored and audited are vital.

Quality and reliability: Conversational AI systems rely on natural language processing to understand and generate natural and engaging responses. However, these technologies are not perfect, and they may sometimes produce errors, inaccuracies or inappropriate content. Once again, monitoring of results is important.

The reality is that this is “uncharted waters” for many companies, with attendant concerns not only about the accuracy and security of data but also about the possibility that an AI could produce an outcome resulting in psychosocial harm, a security breach or some other undesirable result.

Why should I trust AI?

My testing experience was instructive – the erroneous information was one thing, but the difficulty in doing something about it definitely dented my trust in the system. So my question was: why should I trust AI?

“One possible answer is that we should trust AI based on evidence and evaluation, not on blind faith or fear,” it replied. “We should trust AI when it can demonstrate its performance, accuracy, and robustness in various scenarios and contexts. We should trust AI when it can explain its decisions, actions, and outcomes in a transparent and understandable way. We should trust AI when it can respect our values, preferences, and rights, and avoid causing harm or bias.

“However, trusting AI is not a one-way street. It also requires us to be responsible and informed users of AI. We should understand the limitations and uncertainties of AI, and use it with caution and common sense. We should monitor and evaluate the impacts and consequences of AI, and report any problems or errors. We should participate in the governance and regulation of AI, and demand accountability and oversight from its developers and providers.

“In conclusion, trusting AI is a complex and dynamic process that depends on many factors and conditions. It is not a binary choice between accepting or rejecting AI, but rather a continuous dialogue between humans and machines. By trusting AI based on evidence and evaluation, and by being responsible and informed users of AI, we can harness its potential and mitigate its risks.”

Where to from here?

If we are looking at any data or talking to someone else to find out about something, we are continuously performing our own assessment of the veracity of the information: does this person/report/article know what they are talking about? We also know that some people’s opinions and viewpoints are more valuable than others, and this is an integral part of our decision-making process.

But what if people take the view ‘computer says’ and accept AI data at face value? How do we truly know what is correct and what is not, and, in particular, from what viewpoint? Are we ready to have a ‘black box’ make decisions and run our business?

Similar questions have been raised many times in history as new technologies have emerged, yet systems that we now take for granted (PLC control of plants, to name just one example) have since become commonplace.

AI and machine learning are already making a positive impact on many different parts of our industry – we look forward to gaining greater insights into how this can increasingly be so.

This article was originally published in Quarry Magazine.

To find out more, contact Steve Franklin on +61 474 183 939 or [email protected]